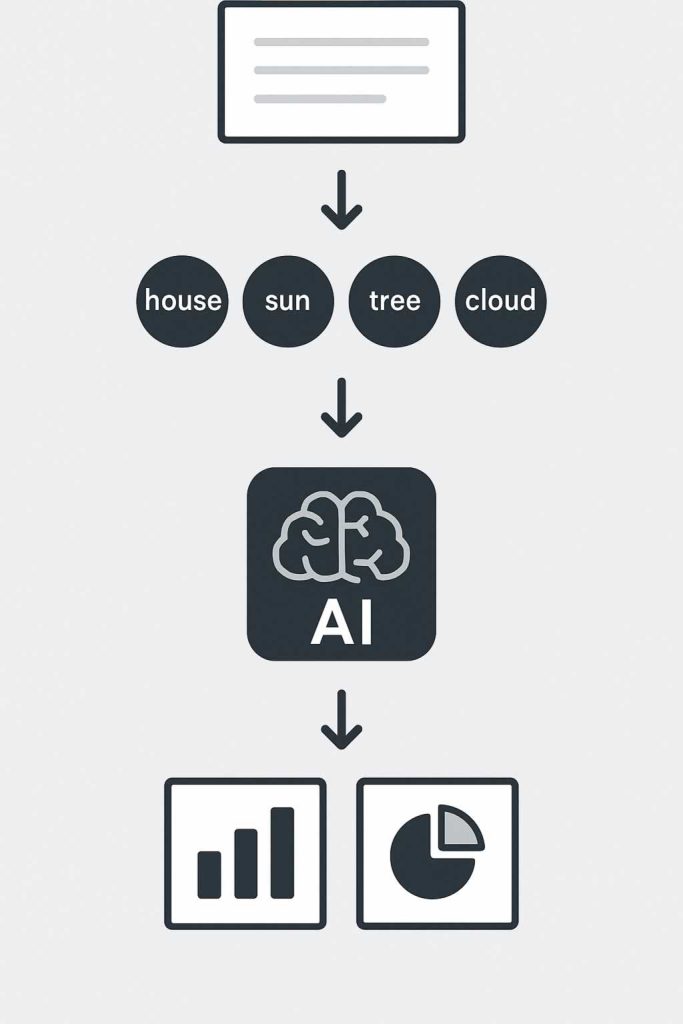

Semantic tokenization is the process of breaking text into meaningful units that reflect not just structure but also intent and context. Unlike basic tokenization, which simply splits text into words or subwords, semantic tokenization aims to capture the meaning behind those elements. At AEHEA, we use semantic tokenization when we want to extract not just surface-level data but also the underlying purpose, emotion, or category of the content. This allows us to design AI systems that respond more naturally and interpret input more intelligently.

Traditional tokenization might split the sentence “Schedule a call for next Tuesday” into individual words: “Schedule,” “a,” “call,” “for,” “next,” and “Tuesday.” Semantic tokenization, however, would recognize that this sentence involves an action (scheduling), an object (a call), and a time reference (next Tuesday). It organizes the sentence into components that reflect real-world meaning, making it easier for downstream models to perform tasks like intent recognition, dialogue handling, or task automation. This is particularly useful in chatbot systems and voice assistants where precision and nuance matter.
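To make the contrast concrete, here is a minimal Python sketch that runs both approaches on the example sentence. It is an illustration only: the `SemanticToken` class, the `COMMAND` pattern, and both function names are hypothetical, and the single regular expression stands in for what would normally be a trained language understanding model.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class SemanticToken:
    role: str  # "action", "object", or "time"
    text: str


# Hypothetical pattern covering one command shape only:
# <verb> <article + noun> for <time expression>
COMMAND = re.compile(
    r"^(?P<action>\w+)\s+(?P<object>(?:a|an|the)\s+\w+)\s+for\s+(?P<time>.+)$",
    re.IGNORECASE,
)


def basic_tokens(sentence: str) -> list[str]:
    """Plain whitespace split: structure only, no meaning."""
    return sentence.split()


def semantic_tokens(sentence: str) -> list[SemanticToken]:
    """Group the sentence into action, object, and time components.

    Returns an empty list when the sentence does not fit the pattern.
    """
    match = COMMAND.match(sentence.strip())
    if match is None:
        return []
    return [
        SemanticToken(role, match.group(role))
        for role in ("action", "object", "time")
    ]


sentence = "Schedule a call for next Tuesday"
print(basic_tokens(sentence))
for token in semantic_tokens(sentence):
    print(f"{token.role}: {token.text}")
```

The whitespace split yields six unrelated words, while the semantic version yields three labeled components that a downstream intent handler could act on directly.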

To implement semantic tokenization, we often rely on natural language understanding models or rule-based processing layers. These tools recognize patterns in syntax and meaning and classify phrases according to function, for example by identifying names, dates, commands, or emotional tone. This kind of tokenization is more complex than basic text splitting, but it enables AI systems to perform more advanced reasoning. At AEHEA, we use this technique in client-facing applications where understanding user intent is essential to delivering a useful response.
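A rule-based processing layer of the kind described above can be sketched as a small table of labeled patterns. Everything in this example is an assumption made for illustration: the `Span` type, the `RULES` table, the label names, and the word lists are hypothetical and far from exhaustive. Names are left out because recognizing them reliably usually calls for a trained entity recognition model rather than hand-written rules.

```python
import re
from typing import List, NamedTuple


class Span(NamedTuple):
    label: str  # functional category of the phrase
    text: str   # matched text as it appears in the input
    start: int  # character offset, used to keep spans in reading order


# Hypothetical rule table: each entry maps a label to a pattern.
RULES = [
    ("DATE", re.compile(
        r"\b(?:next|this|last)\s+"
        r"(?:monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b"
        r"|\b(?:today|tomorrow)\b",
        re.IGNORECASE,
    )),
    ("COMMAND", re.compile(r"\b(?:schedule|cancel|book|remind)\b", re.IGNORECASE)),
    ("NEGATIVE_TONE", re.compile(
        r"\b(?:frustrated|angry|disappointed|upset)\b", re.IGNORECASE
    )),
]


def tag_spans(text: str) -> List[Span]:
    """Apply every rule and return the matched spans in reading order."""
    spans = []
    for label, pattern in RULES:
        for match in pattern.finditer(text):
            spans.append(Span(label, match.group(0), match.start()))
    return sorted(spans, key=lambda span: span.start)


for span in tag_spans("I'm frustrated, please cancel my call for next Tuesday"):
    print(f"{span.label}: {span.text}")
```

Keeping the rules in a table makes it straightforward to add categories or to replace a single rule with a model-based classifier later without changing the calling code.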

Semantic tokenization is especially valuable when the AI system needs to take action based on the input it receives. It allows the model to go beyond word matching and begin interpreting structure, context, and purpose. Whether we are designing AI that responds to natural language commands, summarizes legal text, or routes customer inquiries, semantic tokenization gives us the foundation to build systems that understand what people mean, not just what they say. It is a crucial layer between raw input and meaningful AI interaction.