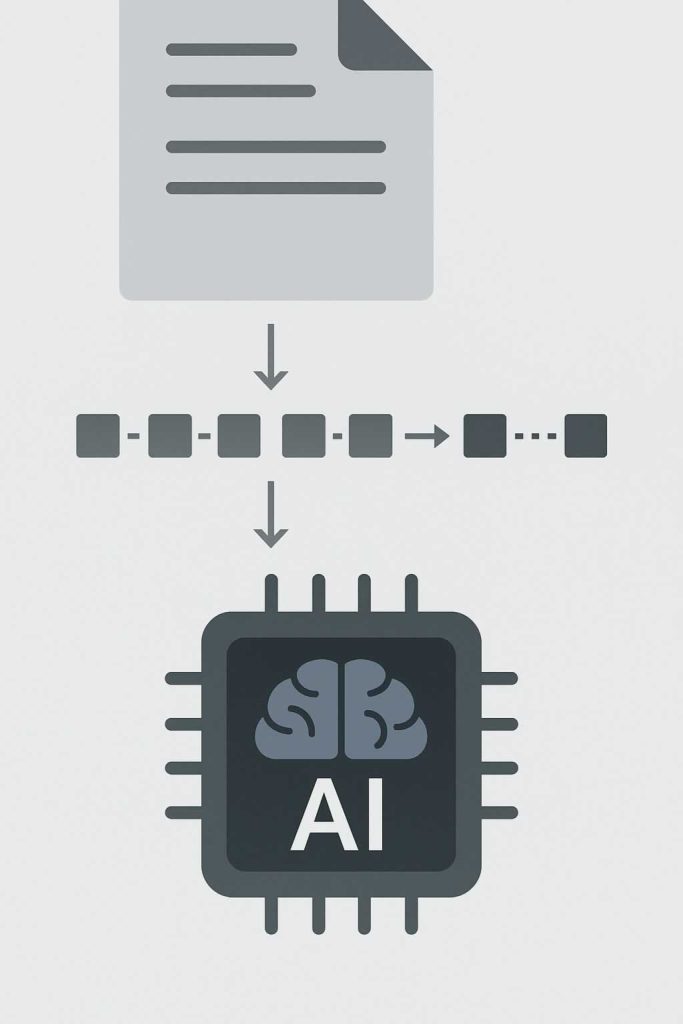

Existing digital content can be tokenized for use in AI, and this process is essential when preparing data for training or interacting with language models. At AEHEA, we regularly convert documents, emails, website text, and structured data into tokenized formats that AI models can read and respond to. Tokenization allows us to take raw human language or structured text and break it into components that align with how an AI model understands input. This is the foundation for using existing content in tasks like classification, summarization, translation, and more.

The process begins with identifying and extracting the content. This might involve parsing a PDF, cleaning text from a database, or scraping a webpage. Once the content is accessible in plain text form, we apply a tokenizer a tool designed to convert text into a list of tokens, which are the units the model will interpret. Each token is mapped to a numerical identifier in the model’s vocabulary. This conversion allows the model to analyze the text, generate predictions, or produce a response. For models like GPT, the number of tokens directly affects how much content can be processed at once.

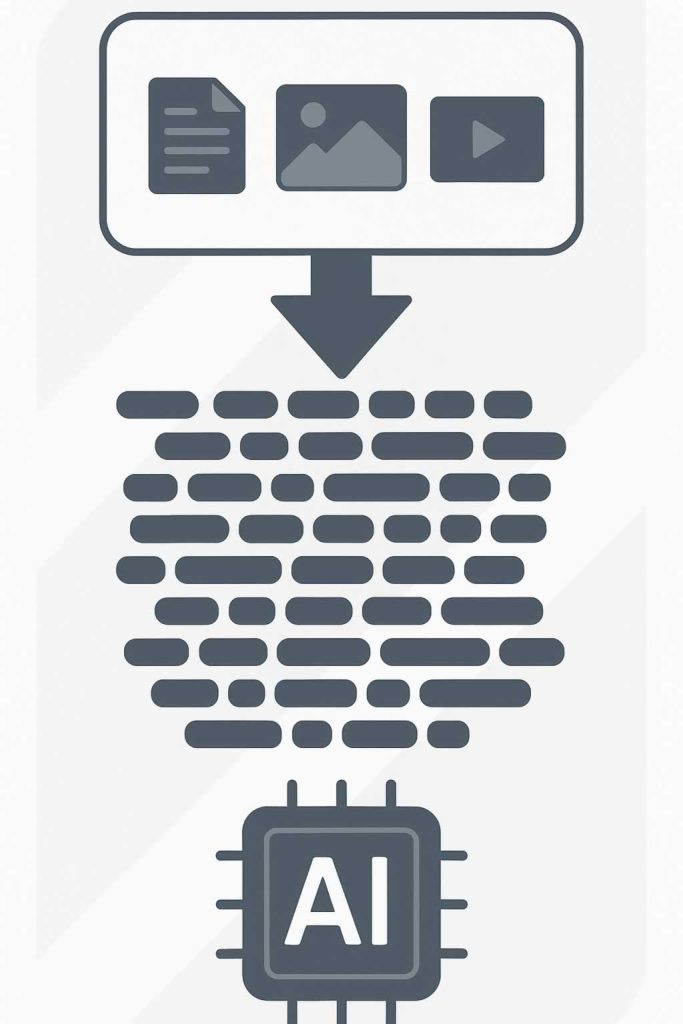

Not all content needs to be rewritten or simplified before tokenization. Most tokenizers handle natural language well, including punctuation, special characters, and technical terms. However, we often optimize or truncate content for models with input limits. This is particularly important when working with long-form content like reports, transcripts, or user manuals. By summarizing or segmenting material before tokenization, we ensure the model receives only the most relevant information. Tools from platforms like OpenAI or Hugging Face allow us to perform this task efficiently and at scale.

At AEHEA, we use tokenization to transform digital assets into structured, AI-ready formats that unlock new capabilities. Whether the goal is to train a custom model, power a chatbot, or extract insights from existing data, tokenizing content bridges the gap between static files and dynamic, intelligent systems. It allows us to reuse and repurpose what businesses already have, turning information into action through the lens of artificial intelligence.